One — Technical Setup (On-Site Optimisation)

Sitemap and robots.txt files

Sitemaps list all the URLs on a site and are used by search engines to identify which pages should be crawled and indexed. There are two types of sitemaps — HTML and XML. XML sitemaps are primarily for search engines while HTML sitemaps are primarily for users.

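A robots.txt file tells search engine crawlers which pages or sections of a site they should not crawl (for example, pages you don’t want to appear in SERPs). Blocking crawling does not by itself guarantee that a page stays out of the index, so use a noindex directive for pages that must never appear in search results.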

Both sitemaps and robots.txt files are important for SEO as they help to increase crawling and indexing speed.
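As a rough illustration of how the two files fit together, the sketch below uses Python’s standard library to parse a made-up robots.txt and to build a minimal XML sitemap. The domain, the paths and the `load_robots` and `build_sitemap` helpers are assumptions for the example, not taken from any real site.

```python
from urllib.robotparser import RobotFileParser
import xml.etree.ElementTree as ET

# Hypothetical robots.txt: block the checkout area, advertise the sitemap.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Sitemap: https://www.example.com/sitemap.xml
"""

def load_robots(text):
    """Parse robots.txt text into a RobotFileParser (no network access)."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser

def build_sitemap(urls):
    """Return a minimal XML sitemap (as a string) listing the given URLs."""
    urlset = ET.Element("urlset", xmlns="http://www.sitemaps.org/schemas/sitemap/0.9")
    for url in urls:
        ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
    return ET.tostring(urlset, encoding="unicode")

robots = load_robots(ROBOTS_TXT)
print(robots.can_fetch("*", "https://www.example.com/checkout/cart"))  # blocked path
print(robots.can_fetch("*", "https://www.example.com/blog/"))          # allowed path
print(build_sitemap(["https://www.example.com/", "https://www.example.com/blog/"]))
```

In practice most sites generate the sitemap from their CMS or a plugin; the point here is only to show what each file contains.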

Page speed

Page speed (how quickly a web page loads) is important for both SEO and user experience. Google has indicated that page speed is now a ranking signal. Ways to increase page speed include:

- Enable compression

- Optimise images

- Minify CSS, JavaScript, and HTML

- Remove render-blocking JavaScript

- Leverage browser caching

- Reduce server response time

- Use a content distribution network (CDN)

- Reduce redirects

GTmetrix is a helpful tool that shows how well your site loads and recommends ways to optimise it.
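To give a feel for why enabling compression sits at the top of the list, this sketch gzips a block of made-up HTML with Python’s standard library and reports the saving. The sample markup and the `gzip_saving` helper are assumptions for illustration; real savings depend on the page, and compression is normally switched on in the web server or CDN rather than in application code.

```python
import gzip

def gzip_saving(payload: bytes) -> float:
    """Return the fraction of bytes saved by gzip-compressing the payload."""
    return 1 - len(gzip.compress(payload)) / len(payload)

# Made-up, repetitive markup standing in for a real HTML page.
sample_html = (
    "<div class='show'><h2>Title</h2><p>Description</p></div>\n" * 200
).encode("utf-8")

print(f"original size: {len(sample_html)} bytes")
print(f"gzip saving:   {gzip_saving(sample_html):.0%}")
```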

Optimise for Mobile

Now that Google has rolled out mobile-first indexing, it’s essential to optimise for mobile, and switching to responsive design is the best way forward. However, if you plan to maintain separate desktop and mobile versions of your site, the content must be consistent across both.

Mobile-friendliness is also very important in 2018 and all sites should be easy to use on mobile devices. Google’s Mobile-Friendly Test tool is helpful.

Dead links or broken redirects

Broken links send users to a web page that no longer exists (a 404 error) and are bad for both SEO and user experience. Links become ‘broken’ when:

- A linked page is deleted

- A URL is changed without updating the links that point to it

- An incorrect URL is placed in a text link

If you have been using backlinking as an SEO method, be careful when making changes to your site’s URL structure as this could result in broken links. To avoid and fix 404 pages:

- Update content instead of removing it

- When updating your URL structure, use 301 redirects to redirect visitors to new pages

- If a 404 occurs because an external site is linking to you with an incorrect URL, reach out and request an updated link from the webmaster

To find 404 error pages that may be resulting in broken links, use a site crawler like Screaming Frog. For small sites, you can use the Check My Links Chrome extension.
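For a sense of what such a crawler does on a single page, here is a minimal sketch using only Python’s standard library: it extracts every link from a page and reports those that answer with a 4xx or 5xx status. The `LinkExtractor`, `http_status` and `find_broken_links` names are hypothetical, the URLs are made up, and the demo uses a stubbed status lookup so it runs offline.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin
import urllib.error
import urllib.request

class LinkExtractor(HTMLParser):
    """Collect the href of every <a> tag, resolved against a base URL."""
    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(urljoin(self.base_url, href))

def http_status(url):
    """Return the HTTP status code for a URL (makes a real HEAD request)."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=10) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code

def find_broken_links(html, base_url, get_status=http_status):
    """Return (url, status) pairs for links that answer with 4xx or 5xx."""
    extractor = LinkExtractor(base_url)
    extractor.feed(html)
    results = [(url, get_status(url)) for url in extractor.links]
    return [(url, status) for url, status in results if status >= 400]

# Offline demo: a stubbed status lookup stands in for real HTTP requests.
page = '<a href="/about">About</a> <a href="/old-page">Old</a>'
fake_statuses = {
    "https://www.example.com/about": 200,
    "https://www.example.com/old-page": 404,
}
print(find_broken_links(page, "https://www.example.com/", fake_statuses.get))
```

Swapping the stub for the default `http_status` checks live URLs; some servers reject HEAD requests, so a production checker would likely need a GET fallback and handling for connection errors.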

Internal links

An internal link is a hyperlink that points at another page on the same domain. Internal links are useful for three main reasons:

- To assist users in navigating the website

- To spread ranking power/link equity across the site

- To help establish information hierarchy

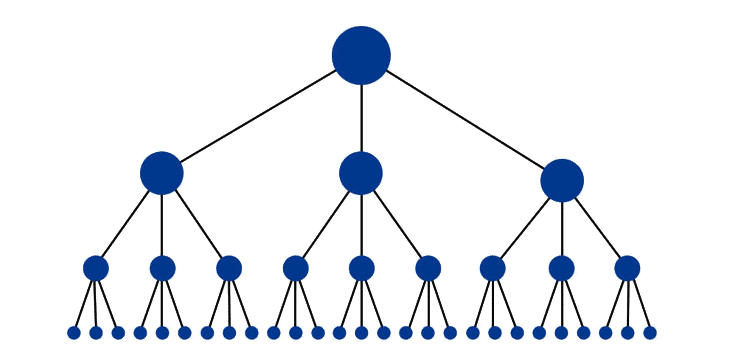

The optimal internal linking structure for a website is a pyramid-like shape, as can be seen in the diagram above. This structure keeps the number of links between the homepage (at the top) and any given page on the site to a minimum, which helps to spread link equity throughout the site. It’s a common structure on websites with category and subcategory systems.

Read more about best practices for internal linking.
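The “minimum number of links from the homepage” idea can be measured as click depth. The sketch below runs a breadth-first search over a small, made-up link graph; the `click_depths` function and the example paths are assumptions for illustration.

```python
from collections import deque

def click_depths(links, homepage):
    """Breadth-first search: fewest clicks from the homepage to each page."""
    depths = {homepage: 0}
    queue = deque([homepage])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

# Hypothetical site: homepage -> categories -> subcategories -> pages.
site = {
    "/": ["/shows/", "/about/"],
    "/shows/": ["/shows/comedy/", "/shows/theatre/"],
    "/shows/comedy/": ["/shows/comedy/late-night/"],
}

for page, depth in click_depths(site, "/").items():
    print(depth, page)
```

Pages that end up with a large depth, or that never appear in the result at all, are candidates for extra internal links.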

External links

An external link is a hyperlink that points at a page on another domain. If another website links to your site, this is an external link to your site; likewise, if you link out to another website, this is an external link from your site.

External links are one of the most important sources of ranking power, with search engines using a variety of metrics to determine the value of a given external link. These metrics include:

- The authority of the linking domain (often approximated with third-party scores such as Moz’s Domain Authority, or DA)

- The anchor text in the link

- The number of links to the same page on the source page

This Moz article looks at external links in greater detail.

Simple URL structure

It’s SEO best practice to include your most valuable keyword in the URL. However, search engines don’t like lengthy strings of words, so keep your URLs short and include as few words as possible beyond the main keyword for the page.
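One way to apply this consistently is to generate URL slugs from page titles. The following is a minimal sketch, assuming a hypothetical `make_slug` helper and an arbitrary default cap of five words; neither comes from a particular CMS.

```python
import re

def make_slug(title, max_words=5):
    """Lowercase the title, keep letters and digits, join with hyphens."""
    words = re.findall(r"[a-z0-9]+", title.lower())
    return "-".join(words[:max_words])

print(make_slug("Comedy Show Brisbane: What's On This Weekend?", max_words=3))
```

Putting the main keyword at the start of the title means it survives the word cap.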

Two — Content (On-Page Optimisation)

Content wordcount

Where word count is concerned, more is generally better. The highest performing web pages tend to be those with 1,000+ words.

Your pages should have a minimum of 100 words to rank on SERPs, with 500 words a good word count to aim for. That said, when you are creating content to promote your products and services and need to deliver a great user experience, quality trumps quantity.

This Neil Patel article looks at why focused and comprehensive content ranks better.

Keyword mentions

The number of times a keyword is mentioned in the content of each page is important, but what is more important is readability and delivering great user experiences. Ideally, all mentions would be direct mentions. However, it’s fine to ‘break up’ a keyword to make the language more natural. See the examples below.

| Keyword | Direct | Broken Up |
| --- | --- | --- |
| Brisbane theatre | … attending a Brisbane theatre… | … attending a theatre production in Brisbane… |
| comedy show Brisbane | … presenting a comedy show in Brisbane… | … Brisbane Powerhouse is presenting a comedy show… |

H1 Tag

Use your keyword at least three times in the body content of each page, with at least one mention in the first 100 words. This further helps bots to quickly identify what the page is about.
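The guideline above can be checked mechanically. This sketch counts direct mentions of a keyword and tests whether one falls within the first 100 words; the `keyword_report` function is a hypothetical helper, and it deliberately ignores ‘broken up’ mentions, which would need a looser match.

```python
import re

def keyword_report(body, keyword, min_mentions=3, intro_words=100):
    """Count direct keyword mentions and check for one in the opening words."""
    words = re.findall(r"[\w'’]+", body.lower())
    target = keyword.lower().split()
    size = len(target)
    # Start index of every place the keyword's words appear consecutively.
    positions = [
        i for i in range(len(words) - size + 1) if words[i:i + size] == target
    ]
    return {
        "mentions": len(positions),
        "enough_mentions": len(positions) >= min_mentions,
        "in_intro": any(start + size <= intro_words for start in positions),
    }

# Made-up body copy: one early mention, filler, then one late mention.
body = (
    "Every Brisbane theatre has its own character. "
    + "filler " * 120
    + "Book a Brisbane theatre ticket early."
)
print(keyword_report(body, "Brisbane theatre"))
```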

URL

Include the page’s most important keyword in the URL. Since Google’s Hummingbird update, you no longer need to worry about removing stop words from the web address.

Meta title and meta-description tags

Including the most valuable keyword in the meta title and meta description is helpful for both users and search engines in understanding what the page is about.

Google generally displays the first 50 – 60 characters of the meta title, so keep your meta titles under 60 characters and most of your title will be displayed in SERPs.

Meta description character counts were extended to 290 characters and then reduced back to 160 characters in 2018. This is likely to change again, but best practice at present is to aim for 120 – 155 characters.

While search engines don’t use meta description content as a direct ranking signal, compelling meta descriptions and titles attract user interest in SERPs and help to increase the click-through rate (CTR), which is widely regarded as a ranking signal.

This Moz article is a good source of information on meta description length.
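The length guidance in this section reduces to simple arithmetic, sketched below. The `check_meta` function and its defaults (60 characters for titles, 120 to 155 for descriptions) are assumptions that mirror the figures quoted above; character counts are only an approximation of what a search engine actually displays.

```python
def check_meta(title, description, title_max=60, desc_range=(120, 155)):
    """Return a list of length problems; an empty list means both are fine."""
    issues = []
    if len(title) > title_max:
        issues.append(f"title is {len(title)} characters (over {title_max})")
    low, high = desc_range
    if not low <= len(description) <= high:
        issues.append(
            f"description is {len(description)} characters (aim for {low}-{high})"
        )
    return issues

# Hypothetical page metadata.
print(check_meta(
    title="Comedy Shows in Brisbane | Brisbane Powerhouse",
    description="See what's on.",
))
```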

Use LSI and synonyms in copy

Latent semantic indexing (LSI) involves including keywords that are thematically related to (share the same context as) your keyword. For example, LSI terms for ‘coffee’ include ‘cafe’, ‘coffee shop’, ‘latte’ and ‘espresso’.

LSIGraph is a great LSI generation tool that’s free to use.

Including synonyms of your keywords is helpful for both SEO and usability. By using synonyms of your keywords when creating new content, you can focus more on usability and less on showing Google that the page is relevant for a particular keyword.

Optimise alt tags and filenames

Search engines can’t see images, so you need to tell them what each image shows and how it relates to the other on-page content. To do this, write a descriptive alt tag (alt text) for each image and give the file a meaningful name if it doesn’t already have one.

- Keep each alt tag under 125 characters

- Use hyphens (not underscores or spaces) to separate words in filenames

- Give the alt tag and filename a similar title

You may find this article helpful when naming alt tags and image files.
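The three rules above lend themselves to a quick automated check. In this sketch the `image_issues` function is hypothetical, and the “similar title” rule is approximated crudely by requiring that the alt text and filename share at least one word, which is an assumption rather than an established test.

```python
import re
from pathlib import PurePosixPath

def image_issues(filename, alt_text, alt_max=125):
    """Return a list of problems with an image's filename and alt text."""
    issues = []
    if len(alt_text) >= alt_max:
        issues.append(f"alt text is not under {alt_max} characters")
    stem = PurePosixPath(filename).stem  # filename without the extension
    if re.search(r"[_\s]", stem):
        issues.append("filename uses underscores or spaces instead of hyphens")
    name_words = set(re.findall(r"[a-z0-9]+", stem.lower()))
    alt_words = set(re.findall(r"[a-z0-9]+", alt_text.lower()))
    if not name_words & alt_words:
        issues.append("alt text and filename share no words")
    return issues

print(image_issues("brisbane-theatre-stage.jpg", "Stage of a Brisbane theatre"))
print(image_issues("IMG_0042.jpg", "Stage of a Brisbane theatre"))
```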

Duplicate content

Content that’s duplicated across two or more pages does more than deliver a substandard user experience; it can also have a negative effect on your SEO. Search engines have issues with duplicate content for three main reasons:

- They don’t know which version of the content (URL) to include in the indices

- They don’t know which version to rank in SERPs

- They don’t know where to direct the link metrics (link equity, authority, trust, etc.)

This can result in a loss of rankings and traffic, along with Google penalties.

This Moz article is a good guide to duplicate content in an SEO context.
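A simple way to spot exact duplicates is to hash each page’s text and group URLs that share a hash. The sketch below assumes you already have the body text of each page (for example, from a crawl); the `find_duplicates` function and the sample pages are made up, and near-duplicates with small wording differences would not be caught.

```python
import hashlib
import re
from collections import defaultdict

def find_duplicates(pages):
    """Group URLs whose text is identical after normalising case and spacing."""
    groups = defaultdict(list)
    for url, text in pages.items():
        normalised = re.sub(r"\s+", " ", text).strip().lower()
        digest = hashlib.sha256(normalised.encode("utf-8")).hexdigest()
        groups[digest].append(url)
    return [urls for urls in groups.values() if len(urls) > 1]

# Hypothetical crawl output: URL -> extracted body text.
pages = {
    "/shows/comedy/": "Comedy shows in Brisbane this month.",
    "/shows/comedy/?sort=date": "Comedy  shows in Brisbane this month. ",
    "/about/": "About the venue.",
}
print(find_duplicates(pages))
```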

Three — Website Migration Checklist

During website migration, it is recommended to change as little content as possible at one time. Once the migration is complete, wait for traffic to stabilise before changing any on-page elements. This way, it is easier to pinpoint the reason for any drops in traffic.

Keep control of the old domain

The old domain must redirect to the new one. This ensures the domain authority and link equity of the old website are passed on to the new website. Losing the links from the old domain may mean losing your website’s rankings once the migration is complete.

Pre-migration

Migrating a website to a new domain often involves a few hiccups and may involve a little downtime, so to be on the safe side, plan your migration during a slow period.

Content

Avoid changing content on high traffic pages, ranking pages and linked-to pages until after the migration has been completed and traffic and rankings have stabilised.

If it’s absolutely necessary to remove pages/content or rename URLs, map old URLs to new URLs in preparation for 301 redirects.
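A redirect map can be as simple as a table of old paths and new paths. The sketch below, with a hypothetical `flatten_redirects` helper and made-up paths, resolves each old URL to its final destination so that every 301 is a single hop, and raises an error if the map contains a loop.

```python
def flatten_redirects(redirects):
    """Point every old URL straight at its final destination."""
    flattened = {}
    for source in redirects:
        seen = {source}
        target = redirects[source]
        # Follow the chain until the target is no longer redirected itself.
        while target in redirects:
            if target in seen:
                raise ValueError(f"redirect loop involving {target}")
            seen.add(target)
            target = redirects[target]
        flattened[source] = target
    return flattened

# Hypothetical old -> new mapping that contains a two-step chain.
redirect_map = {
    "/old-shows": "/shows",
    "/shows": "/whats-on",
    "/contact-us": "/contact",
}
print(flatten_redirects(redirect_map))
```

The flattened map can then be translated into whatever redirect syntax your server or CMS uses.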

Website structure

A well-thought-out site structure is at the core of SEO: it helps you earn sitelinks in SERPs and enables better crawling and indexing, which is vital for showing Google which pages are the most important.

An easily navigable site also provides a great user experience and keeps visitors on your site longer, both of which are important for SEO. When planning out your new website structure, start by comparing the new site with the old website architecture. Then:

- Crawl the website and compile a complete list of URLs. This ensures all pages are accounted for on the new site. Again, if you need to remove any pages, map old URLs to new URLs for redirection.

- Identify any broken links that need fixing.

- Identify redirecting links that need to be updated to avoid redirect chains.

- Compare new vs old navigation and user experience.

- Make sure all conversion elements are present on the new website. These are:

  - Unique selling point (USP)

  - Trust signals and social proof

  - Calls to action

  - Clickable phone numbers

  - Images or videos

  - Contact forms

  - Name, address, telephone number and hours on the footer

Good website design is all about having a great website structure that puts all the pieces together in a logical way that’s appealing to both users and search engines.

Post-migration

Once you’ve migrated to the new site or domain, perform a thorough, in-depth post-migration check. You will need to:

- Make sure all redirects work (site-wide, individual 1:1 redirects, broken links redirects, updated 301 redirects).

- Self-canonicalise all new pages and verify that there are no canonicalisations to the old site.

- Address duplicate content issues that may pop up (e.g. multiple versions of URLs, HTTPS or HTTP, www or non-www, etc.).

- Check that internal search result pages are set to noindex (for sites with a search function).

- Update all internal links.

- QA the website to make sure there are no bugs and all buttons are working.

- Check page speed, mobile friendliness and mobile content visibility.
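The canonicalisation check in the list above can be partly automated. This sketch reads the canonical link from a page’s HTML and classifies it; the `CanonicalFinder` class, the `canonical_status` function and both domains are hypothetical, and the HTML is passed in as a string so the example runs without network access.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit

class CanonicalFinder(HTMLParser):
    """Record the href of the first <link rel="canonical"> tag."""
    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel and self.canonical is None:
            self.canonical = attributes.get("href")

def canonical_status(page_url, html, old_domain):
    """Classify a page's canonical tag after a migration."""
    finder = CanonicalFinder()
    finder.feed(html)
    canonical = finder.canonical
    if canonical is None:
        return "missing"
    if urlsplit(canonical).hostname == old_domain:
        return "points to old site"
    if canonical.rstrip("/") == page_url.rstrip("/"):
        return "self-canonical"
    return "points elsewhere"

# Made-up page on the new domain that still canonicalises to the old one.
html = '<head><link rel="canonical" href="https://www.old-example.com/shows/"></head>'
print(canonical_status("https://www.new-example.com/shows/", html, "www.old-example.com"))
```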

When migrating a website, following a clear plan is the best way to avoid a loss of rankings and traffic, with the pre-migration and post-migration phases just as important as the actual migration phase. To avoid Google penalties and a substantial loss of your hard-earned rankings and website traffic, consider how search engines will react to the migration and act accordingly.
